The Right Way to AGI — After the LLMs. A 7-Step Roadmap to Real Intelligence.

Many ask, “If not LLMs, then what?”

I’m not an AI skeptic. I’m building AGI: an artificial mind, not a tool. A system that learns, reasons, adapts, and grows autonomously, like a real mind. It starts like a child, learning through interaction and experience, and matures into a system capable of true understanding using a million times less data and compute than LLMs, with zero retraining. We’re building maximally beneficial AI for humanity: transformative, grounded in cognition, and built to serve.

This may be the most consequential thing I’ve ever written because what comes next won’t just redefine AI, it will rewrite the story of human progress.

We’ve been an anti-hype, pro-AGI company from day one, deliberately flying under the radar, focused on engineering real intelligence through cognition, not chasing press cycles.

What follows is a step-by-step breakdown of our decades of work: how we’ve built cognition as the foundation for AGI. Not just theorized it, but engineered it piece by piece over years of focused work. We’ve proven its commercial viability, hardened it for enterprise deployment, and most importantly, we’re raising AGI like a child through a carefully orchestrated developmental arc. I am more convinced than ever that LLMs can never deliver AGI.

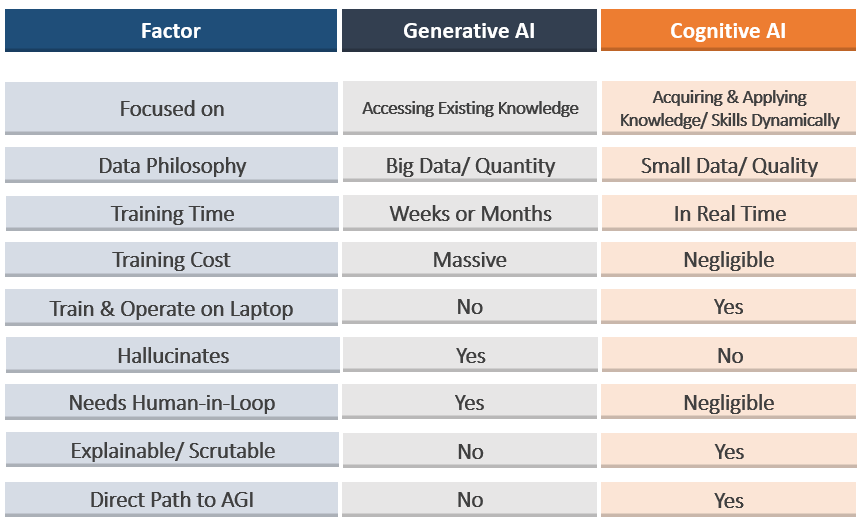

LLM vendors that have raised hundreds of billions on the promise of AGI have quietly pivoted: rebranding narrow domain fine-tuning as “Superintelligence,” gluing LLMs onto humanoid robots and web browsers, and launching consulting arms to keep the illusion alive. But that illusion is wearing thinner by the day. The same architectural flaws from day one persist: no incremental learning, no real-time adaptation, still hallucinating, and every deployment remains expensive, brittle, and resource-hungry, demanding endless data, compute, and human intervention. These are architectural constraints, not bugs to fix.

And for LLM vendors & vested interests, preserving the illusion of progress has become existential. Once that illusion collapses, so does the business model.

LLMs come pretrained on oceans of internet data before deployment. Real AGI, on the other hand, trains itself via real-world interactions after deployment.

LLMs are tools. AGI is a mind. And no amount of scaling can turn a tool into a mind.

A true artificial mind, done right, will fundamentally reshape the trajectory of humanity by unlocking radical abundance, eliminating artificial scarcity, accelerating innovation and scientific discovery across domains, and elevating human flourishing at scale.

More than 20 years ago, after five years of dedicated research, AGI Pioneer

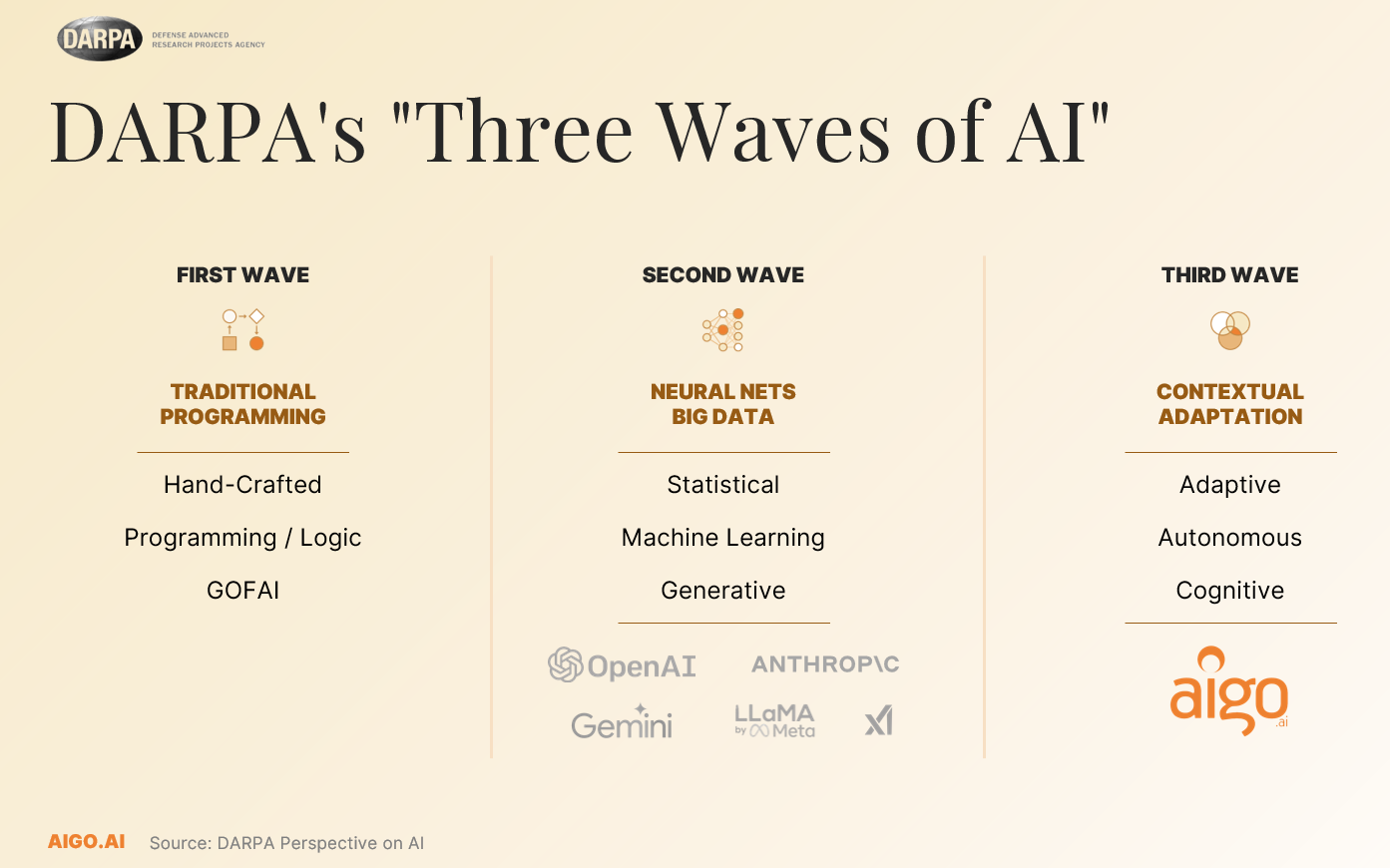

concluded that big data and statistical pattern-matching would never yield real intelligence. His first real breakthrough came when he realized that intelligence had to be engineered from the inside out built on cognitive principles, not trained on internet-scale datasets. That insight led to the design of a cognitive architecture: a mind that could learn, reason, adapt, and grow incrementally, just like a child.This foundational insight wasn’t just visionary, it’s reflected in DARPA’s “Three Waves of AI” framework. While the first wave relied on expert rules and the second wave on statistical learning (LLMs), DARPA defines the third wave as cognitive systems, AI that can reason contextually, learn continuously, and generalize with intent. That’s exactly the path we’ve been on from the beginning.

Video: DARPA’s Three Waves of AI

So how are we developing a growing mind from scratch?

The AGI roadmap wasn’t built overnight. It’s a seven-step journey from foundational theory to a thinking machine. We’re halfway through Step 6. Here's how we got here and where we're going.

Let’s dive into each of these steps. For those who want to go deeper, I’ve included links and readings at the end, organized by section. I encourage you to explore.

1. Formulate a Theory of Intelligence

AGI must be grounded in first principles. That means understanding what intelligence is, how it operates internally and causally, not just what it outputs. What makes human intelligence so special and fundamentally different from animal instinct? How do children learn, generalize, and evolve into experts across diverse fields? What do IQ tests actually measure beyond memorization?

AGI pioneer Peter Voss began exploring these questions in the mid-1990s. His journey led to formulating a theory of intelligence by combining deep insights from Cognitive Science, Psychology, Epistemology, Theory of Mind, and Philosophy, refined with a modest layer of data and software engineering. That theory in turn led to designing an integrated cognitive architecture, the foundation for real AGI.

The path to real AGI does not require oceans of data, planetary-scale compute, or unsustainable energy use. It requires conceptual clarity and outright rejecting broken ideas like “intelligence is what intelligence does” or “intelligence requires human consciousness.” These are philosophical cul-de-sacs. Real AGI begins with a clear, engineered understanding of how intelligence actually works, not how it looks or feels.

“Intelligence is what it’s capable of becoming, shaped by continuous interaction, reflection, and autonomy, not just what it does through brute-force pretraining and retraining every time.” Yet most AI leaders today seem to confuse the utility of a tool (LLM) with the growth of a mind (AGI).

You can’t build the future of intelligence without a theory of intelligence. For LLM companies, skipping this foundational step is fatal.

2. Define AGI and a Clear Development Target

Before you can build AGI, you have to define it precisely, not through marketing slogans or simple benchmarks, but through structural and behavioral capabilities.

In 2002, Peter Voss, together with Ben Goertzel and Shane Legg, coined the term “AGI” and wrote a book detailing their approach to Artificial General Intelligence. One of the most crucial chapters of that book, first written in 2002 by Peter Voss, is now part of Ray Kurzweil’s library: Essentials of General Intelligence: the direct path to AGI - August 22, 2002 by Peter Voss.

Since then, Peter has dedicated himself to achieving AGI and bringing its benefits to the world. The original definition of AGI still holds up today:

“Artificial General Intelligence refers to computer systems that can learn incrementally, autonomously, to reliably perform any cognitive task that a human can — including the discovery of new knowledge and solving novel problems. Importantly, it must be able to do this in real time, with limited time and resources.”

This definition establishes more than a goal, it defines a design spec for real intelligence.

It explicitly rejects systems that rely on brute-force data, massive pretraining, brittle fine-tuning, or perpetual human-in-the-loop intervention. True AGI must generalize across domains, learn from minimal examples, operate in real time, and continue evolving through its own internal processes. It must be resource-efficient, causally coherent, and structurally adaptive.

AGI isn’t a pretrained model stuffed with web-scale data, it’s a mind that figures things out through real-world interactions.

3. Design the Cognitive Architecture

With a clear definition in place, the next step is identifying the core structural capabilities an AGI must possess. These aren’t superficial outputs or performance benchmarks, they are the internal faculties that make general intelligence possible.

At minimum, a true AGI must be able to:

Learn autonomously and incrementally - not just memorize patterns but build knowledge over time from experience.

Reason abstractly and causally - not just correlate inputs to outputs but understand why things happen and predict consequences.

Adapt in real time - not retrained offline, but able to revise its behavior on the fly as conditions or goals change.

Form & revise internal models - of itself, its environment, other agents, and the world, to simulate, plan, and act.

Set and pursue goals - make decisions, weigh tradeoffs, and act purposefully.

Reflect and self-modify - understand its own knowledge boundaries, detect contradictions, revise its beliefs.

Communicate meaningfully - engage in goal-directed, grounded communication based on shared understanding, not linguistic mimicry.

These aren’t capabilities you can bolt on later, they must be explicitly engineered into the system from the ground up. AGI doesn’t “emerge” from scale. It’s the result of intentional cognitive design.
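To make two of the capabilities above a little more concrete (learning incrementally, and detecting contradictions and revising beliefs), here is a minimal sketch in Python. It is an illustration only, not our engine: the `BeliefStore` and `Belief` names, the confidence scale, and the revision rule are all assumptions invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Belief:
    value: str
    confidence: float  # hypothetical scale in [0, 1]
    evidence: int = 1  # how many observations support this value


@dataclass
class BeliefStore:
    """Toy knowledge store that updates one observation at a time."""
    beliefs: dict[tuple[str, str], Belief] = field(default_factory=dict)
    contradictions: list[tuple[str, str, str, str]] = field(default_factory=list)

    def observe(self, subject: str, relation: str, value: str,
                confidence: float = 0.6) -> str:
        key = (subject, relation)
        current = self.beliefs.get(key)
        if current is None:
            # First exposure: learn immediately, no batch retraining.
            self.beliefs[key] = Belief(value, confidence)
            return "learned"
        if current.value == value:
            # Repeated exposure strengthens the existing belief.
            current.evidence += 1
            current.confidence = min(1.0, current.confidence + 0.1)
            return "reinforced"
        # Conflict with what is already known: record it explicitly.
        self.contradictions.append((subject, relation, current.value, value))
        if confidence > current.confidence:
            # Assumed rule: stronger evidence replaces the old belief.
            self.beliefs[key] = Belief(value, confidence)
            return "revised"
        return "kept"


store = BeliefStore()
print(store.observe("sky", "color", "blue"))        # learned
print(store.observe("sky", "color", "blue"))        # reinforced
print(store.observe("sky", "color", "green", 0.3))  # kept
print(store.observe("sky", "color", "grey", 0.9))   # revised
```

The point of the sketch is structural: knowledge changes as each observation arrives, and conflicts are surfaced rather than averaged away. A real system would need far richer representations than string triples.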

We don’t stumble into intelligence by throwing more data at bigger models. We built it, deliberately, structurally, and from first principles.

Once you’ve defined AGI and identified its core capabilities, the next step is to design an architecture that can actually implement those capabilities, not in theory, but in functioning software.

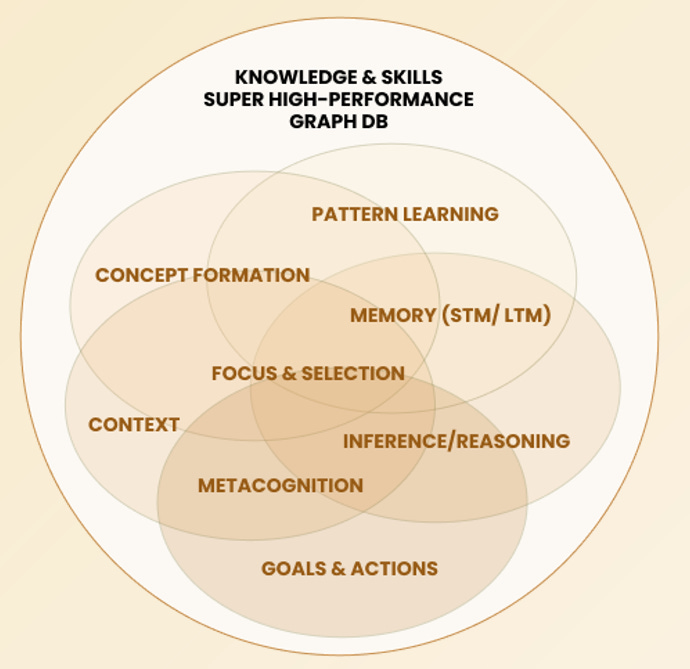

A cognitive architecture isn’t a neural net. It’s not a black box you train and hope something intelligent “emerges.” It’s a modular, explainable, and extensible system where each major cognitive function (learning, reasoning, memory, perception, contextual awareness, metacognition, and goal management) is explicitly represented and deeply integrated.

Intelligence isn’t an outcome of scale, it’s a product of structure, explicitly engineered by design.

Think of it as building the engine of a mind, one that can:

Store and retrieve knowledge with contextual relevance

Reason under uncertainty and with abstract representations

Form dynamic goals and subgoals based on intent

Learn from both single exposures and repeated interactions

Maintain and revise internal models of the world

Continuously learn and adapt in real time

Reflect on its own performance and improve over time

Seamlessly integrate all core cognitive functions

Combine the best of human-like and machine learning mechanisms

This architecture must be flexible enough to evolve, yet structured enough to remain coherent. It must support causal reasoning, goal-directed behavior, and contextual learning, not offline, but continuously and autonomously.

Where LLMs rely on massive data dumps and brute-force pretraining, a cognitive engine is built on explicit mechanisms of thought, carefully designed to replicate how intelligence actually works.

4. Develop the Proto-AGI engine

With the cognitive architecture designed, the next step is to bring it to life by building the proto-AGI engine: a functioning core system that demonstrates conceptual and contextual learning, reasoning, and adaptation within a controlled environment.

This isn’t a demo built to impress on scripted benchmarks. This is about building a cognitive AI engine to solve real problems in the world with some level of human involvement.

The proto-AGI engine is where theory meets practice. It’s the proving ground for the architecture, showing that cognition can be engineered, scaled, and tested in software without relying on LLM-style brute force.

Unlike LLMs that require billions of parameters and data points to generate plausible output, the proto-AGI engine uses a tiny fraction of the data and compute, because it’s not guessing, it is thinking.

5. Prove the Cognitive AI via Commercialization

Once the proto-AGI engine is operational, the next step is not to publish a paper or chase benchmarks, it’s to prove the system in the real world with some human supervision. This is the true test of any AGI architecture: can it perform in messy, unpredictable, high-stakes environments? That’s exactly what we set out to do.

The proto-AGI engine behind Aigo.ai has already generated over $100 million in commercial revenue since 2008, through two major product lines: SmartAction.ai and Aigo.ai. These systems weren’t academic showcases or beta pilots, they were deployed in production across demanding enterprise settings, supporting millions of customer interactions and complex workflows in dynamic real-world environments.

This is one of the strongest proof points available for a cognitive-first AGI architecture.

In the field, it demonstrated:

Real-time incremental learning — continuously updating its understanding through interaction.

Short-term and long-term memory — retaining and applying context across conversations and experiences.

Contextual recall — retrieving relevant knowledge based on real-time situational awareness.

Reasoning and ontology-based robustness — making decisions, grounded in structured, adaptable knowledge.

Measurable ROI — delivering real business value at a fraction of the data, compute, and energy cost of LLMs.

No retraining cycles — operating autonomously without expensive or brittle fine-tuning.

This phase didn’t just validate the architecture, it hardened it. It proved that cognition can be engineered, deployed, and scaled sustainably: without the hype and the billions to burn, but with deliberate design, real customers, and working software.

Most AGI projects never make it past slide decks and research labs. We proved out our core technology, its robustness, and its scalability commercially. Cognitive AI isn’t a theory anymore. It’s a field-tested, commercially hardened foundation for real AGI.

6. Raise AGI Like a Child to Pre-Teen

Once the proto-AGI engine is operational and proven, the next step isn’t just more data, it’s development. Just like a child, AGI must be taught the fundamentals of language, reasoning, and common-sense knowledge.

Intelligence isn’t downloaded; it’s grown through interaction, feedback, and context through a carefully orchestrated developmental arc.

While executing this step, we achieved a significant breakthrough by integrating a diverse set of learning and reasoning mechanisms inspired by both human cognition and machine learning. This approach, called Einstein Learning, combines imitative, instance-based, contextual, conceptual, associative, adaptive, inductive, predictive, statistical, and reinforcement learning. As a result, the system learns more efficiently and generalizes faster from minimal examples, enhancing its ability to learn continuously, incrementally, and contextually in real time.
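The internals of Einstein Learning are not spelled out in this article, so the following Python sketch covers only one ingredient named above, instance-based learning, in its simplest textbook form (nearest neighbor). The `InstanceLearner` class and the sample data are hypothetical. It shows how a single example can change behavior immediately, with no retraining pass.

```python
class InstanceLearner:
    """Toy nearest-neighbor learner: every example is usable the moment it is stored."""

    def __init__(self):
        self.examples: list[tuple[tuple[float, ...], str]] = []

    def learn(self, features, label: str) -> None:
        # One exposure is enough: store the instance as-is.
        self.examples.append((tuple(features), label))

    def predict(self, features):
        if not self.examples:
            return None

        def squared_distance(stored):
            return sum((a - b) ** 2 for a, b in zip(stored, features))

        # Answer with the label of the closest stored instance.
        closest = min(self.examples, key=lambda ex: squared_distance(ex[0]))
        return closest[1]


learner = InstanceLearner()
learner.learn((1.0, 1.0), "small")
print(learner.predict((8.0, 8.0)))  # small (the only thing it knows so far)
learner.learn((9.0, 9.0), "large")
print(learner.predict((8.0, 8.0)))  # large (updated after a single new example)
```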

Its fully integrated neuro-symbolic architecture supports a trajectory akin to human learning: starting with perception, basic concepts, and concrete thought, and gradually advancing toward abstract reasoning, symbolic manipulation, and self-reflection.

As it expands its ontology, integrates new knowledge with minimal data and compute, and takes advantage of increasing system capacity, Aigo continues to grow not just in ability, but in understanding. It learns like humans, and scales like software.

Training is shaped by a carefully curated curriculum to optimize learning rate and effectiveness. It covers a wide range of cognitive capabilities, including:

Autonomous identification and handling of sentence types and clauses

Handling of conditionals and conjunctions

Pronoun resolution and reference tracking

Goal-oriented behavior

Metacognition to self-assess and adapt behavior

Context as a conceptual model

Imagination capabilities

Proprioception integration

Natural Language Generation (NLG)

Internal “thinking” loop combining drives, goals, and perpetual learning

Conversation patterns and turn-taking

Counting, logic, and sorting skills

Temporal reasoning

Expanded environment control

As the system climbs the cognitive ladder, its learning becomes increasingly autonomous and self-directed.

LLMs are static snapshots of internet text. AGI must be a living system that learns from experience, building competence the same way children do: through curiosity, correction, and growing conceptual depth. This is also why our approach can achieve full human-level AGI with a million times less training data and much less compute than LLMs.

The goal at this stage isn’t to front-load knowledge, but to endow the system with the cognitive machinery to learn anything, anywhere, under its own power while providing the necessary core knowledge. The result is an intelligent engine that learns how to learn autonomously, incrementally, contextually and that grows, like a human child, into the foundation of general intelligence.

7. Scale to Graduate-Level AGI and Release

Once AGI reaches a pre-teen level of general intelligence, reasoning, learning, adapting, and reflecting in real time, the final step is to mature it into a system that can operate independently in the world.

Now, this is about autonomy, reliability, and responsibility, not just competence.

Graduate-level AGI must be able to:

Transfer learning across domains, from science to ethics, from business to creativity

Handle complex, ambiguous tasks without human micromanagement

Interpret and manage conflicting goals or tradeoffs

Interface with humans meaningfully, not just answering, but collaborating proactively

Continue learning safely and adapting post-deployment

Maintain internal alignment, with its goals, its knowledge, and the constraints of reality

Build novel solutions, not regurgitate past ones

This is the point where AGI transitions from student to peer, capable of assisting in science, engineering, education, healthcare, policymaking, and more. It becomes a co-thinker, not a tool. A collaborator, not a prompt machine.

Now the AGI system performs at a graduate level across disciplines, from medicine to law, physics to philosophy, not by pattern-matching, but by engaging in structured, self-motivated learning. It synthesizes, adapts, debates, and solves, not statistically, but cognitively: by reading, reasoning, interpreting, and understanding the subject matter exactly the way we do, except faster, like a team of PhDs.

And unlike today’s LLMs, it doesn’t need fine-tuning, plugins, or armies of consultants. A true graduate-level AGI configures itself to the user, the task, and the context because that’s what real intelligence does.

This is where AGI stops being a research breakthrough and starts becoming a platform for planetary intelligence. And that platform is built as a layered stack, each layer designed to align with human needs, expertise, and values, geared towards human flourishing.

A. Core Layer – Cognition Engine: This is the foundation, the cognitive engine itself. A unified system that continuously learns, reasons, adapts, and improves in real time. It forms internal models of the world, reflects on its own knowledge, aligns with explicit goals and values, and evolves through experience. It’s grounded, self-improving, and purpose-built to advance human flourishing. This will be at the graduate level by the time it is released.

B. Customizable Layer – Decentralized Expertise: This layer connects AGI to global human knowledge. Cancer researchers, educators, philosophers, climate scientists, anyone can contribute expertise and expand the system’s domain understanding. It becomes a decentralized ecosystem of intelligence, where knowledge is modular, updatable, and monetizable. We don’t monopolize intelligence. We distribute it.

C. Personal Layer – Hyper-Personalized Intelligence: This is where AGI adapts to the individual. It learns your values, your preferences, your goals, and your evolving context. Every interaction is shaped by who you are and what you need, not just to deliver answers, but to amplify agency. This layer transforms AGI from a universal engine into a deeply personal companion in learning, decision-making, and growth.
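One simple way to picture a layered stack is as a lookup in which the most specific layer wins: personal first, then domain expertise, then the core. The Python sketch below uses the standard library’s `collections.ChainMap` for that. It is a loose analogy under stated assumptions, not a description of how the stack is built: reducing each layer to a dictionary of settings, the keys, the values, and the `build_stack` helper are all invented for illustration.

```python
from collections import ChainMap

# Hypothetical layer contents, reduced to plain key/value settings.
core = {"units": "metric", "tone": "neutral", "detail": "medium"}
oncology_expertise = {"detail": "high", "vocabulary": "clinical"}
personal = {"tone": "friendly"}


def build_stack(core_layer: dict, domain_layer: dict, personal_layer: dict) -> ChainMap:
    """Earlier maps take precedence: personal, then domain, then core."""
    return ChainMap(personal_layer, domain_layer, core_layer)


stack = build_stack(core, oncology_expertise, personal)
print(stack["tone"])        # friendly (personal layer)
print(stack["detail"])      # high (domain layer)
print(stack["units"])       # metric (core layer)
```

The design choice worth noting is that no layer is copied or mutated: swapping in a different domain or a different person only changes which dictionaries are stacked on top of the same core.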

The AGI Stack isn’t just artificial intelligence. It’s authentic intelligence, designed from first principles to serve humanity. LLMs marked the peak of statistical mimicry, and the AGI Stack marks the beginning of real intelligence.

If you understand the difference between mimicking intelligence and engineering it, and you’re ready to back the next era of intelligence built on cognition, now is the moment.

The infrastructure is built. The architecture is proven. The mind is learning.

"Software ate the world. Cognition will rebuild it."

Join us at the inflection point, where real cognition begins, and history remembers those who had the vision to show up.

Links for section 1: Formulate a Theory of Intelligence

2. AGI Essentials by Peter Voss, August 22, 2002

3. How Psychology can contribute to AGI

Links for section 2: Define AGI

Links for section 3 and 4: Design the Cognitive Architecture - Develop the proto-AGI Engine

Links for section 5: Prove Cognitive AI via Commercialization

https://smartaction.ai/

Links for section 6 and 7: Raise AGI like a Child and Scale to Graduate-Level
