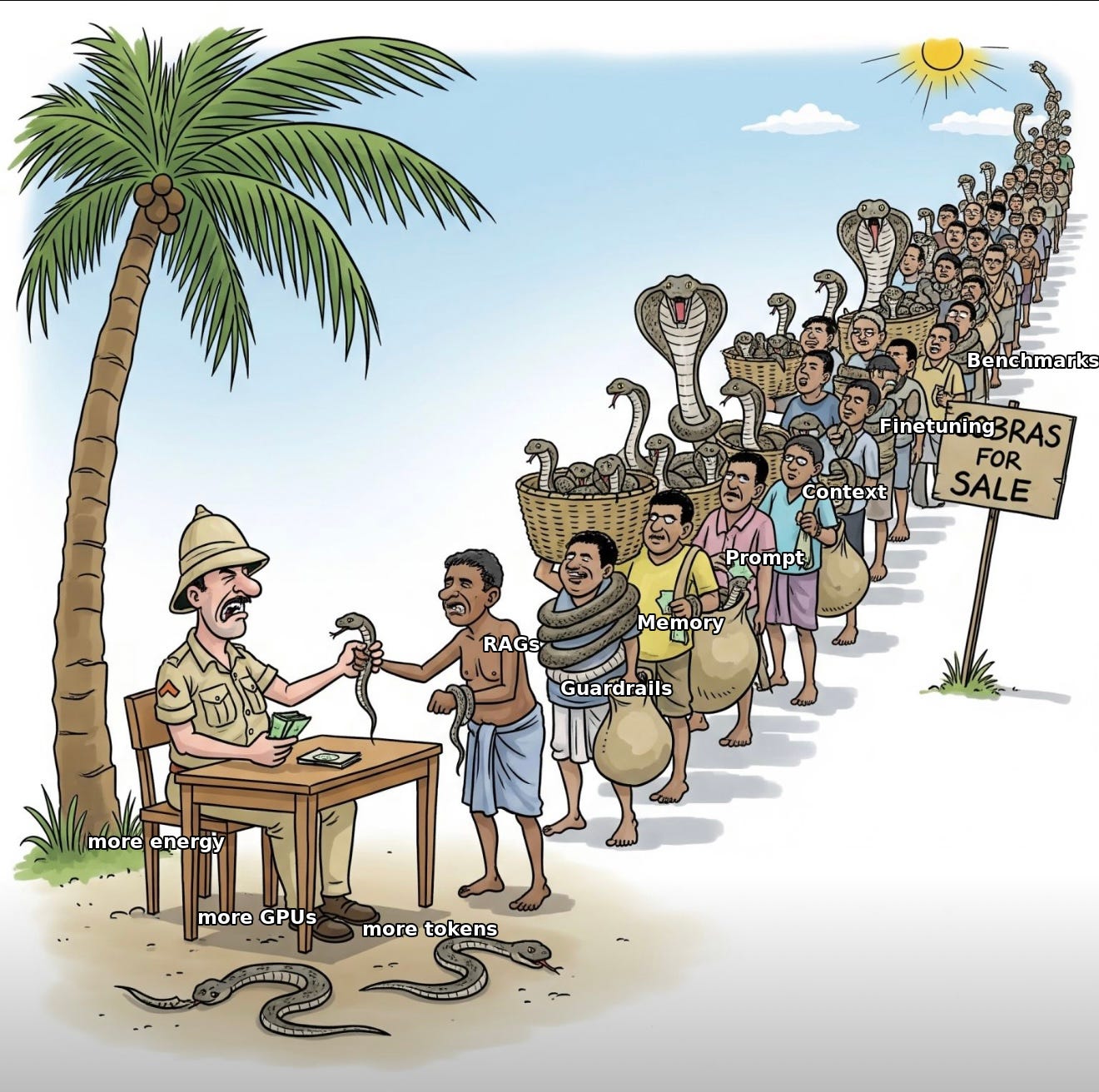

GenAI’s Cobra Effect

Cognition-First, or Cobras Forever.

During the British Raj, officials were alarmed by venomous cobras in Delhi. To fix it, they paid a bounty for every dead snake. It worked, until it didn’t. Locals began breeding cobras for cash. When the program was killed, breeders dumped their inventory and the city wound up with more cobras than before. Incentives turned the cure into a cobra factory.

We’re replaying the same farce in GenAI.

GPUs up. Watts up. Patches up. Tokens up. Theater up. Emissions up. E-waste up. Costs up. Cobras up. Human hours torched. Attention drained. Trust eroded. Reliability: still missing. Real intelligence: still missing.

That’s GenAI’s Cobra Effect.

Bounties breed cobras. (Bounty: Funding + Gamed/Vanity Metrics)

Breeders chase the cash. (Breeders: LLM Vendors + Ecosystem)

Cobras = the core problems of GenAI and of the fixes we keep multiplying: hallucinations, brittleness, RAG dependency, fine-tune overfitting, guardrail theater, etc. We see them, panic, and try to fix them. But instead of changing the core architecture, we fund patches:

RAGs to “ground” answers

Fine-tunes to hit benchmarks

Guardrails to mask failure

Synthetic data to scale faster

Every fix becomes a breeding program.

More Patches → More Complexity → More Tokens → More GPUs → More Watts → More Cost → More Snakes → Bigger Bounty.

Incentives intended to solve the problem end up scaling it. Yet we keep paying for the wrong fixes.

So what’s the real fix?

Not more tokens. Not more patches. Not more watts. Not more GPUs. Not more hacks (Prompt, Memory, Context). Not more funding.

It’s architectural.

Replace the LLM core with a Cognitive AI engine that:

learns incrementally,

adapts autonomously, and

updates its model in real time, cascading changes across its beliefs, behaviors, actions, and understanding.

Until then, we’re just throwing cash and GPUs at a snake pit.

Breeders keep farming the snake pits until incentives flip. Kill the bounties. Fund Cognition-First, or Cobras Forever.
